A Coding Implementation on Building Self-Organizing Zettelkasten Knowledge Graphs and Sleep-Consolidation Mechanisms

In this tutorial, we build a “Zettelkasten” memory system for an AI agent: a “living” architecture that organizes information as a graph of small, linked notes. We move beyond standard retrieval methods to construct a dynamic knowledge graph in which the agent autonomously decomposes inputs into atomic facts, links them semantically, and even “sleeps” to consolidate memories into higher-order insights. Using Google’s Gemini, we implement a solution that copes with real-world API constraints such as rate limits, so that our agent not only stores data but also keeps track of the evolving context of our projects.

```python
import os
import json
import uuid
import time
import getpass
import random
import networkx as nx
import numpy as np
import google.generativeai as genai
from dataclasses import dataclass, field
from typing import List
from sklearn.metrics.pairwise import cosine_similarity
from IPython.display import display, HTML
from pyvis.network import Network
from google.api_core import exceptions
```

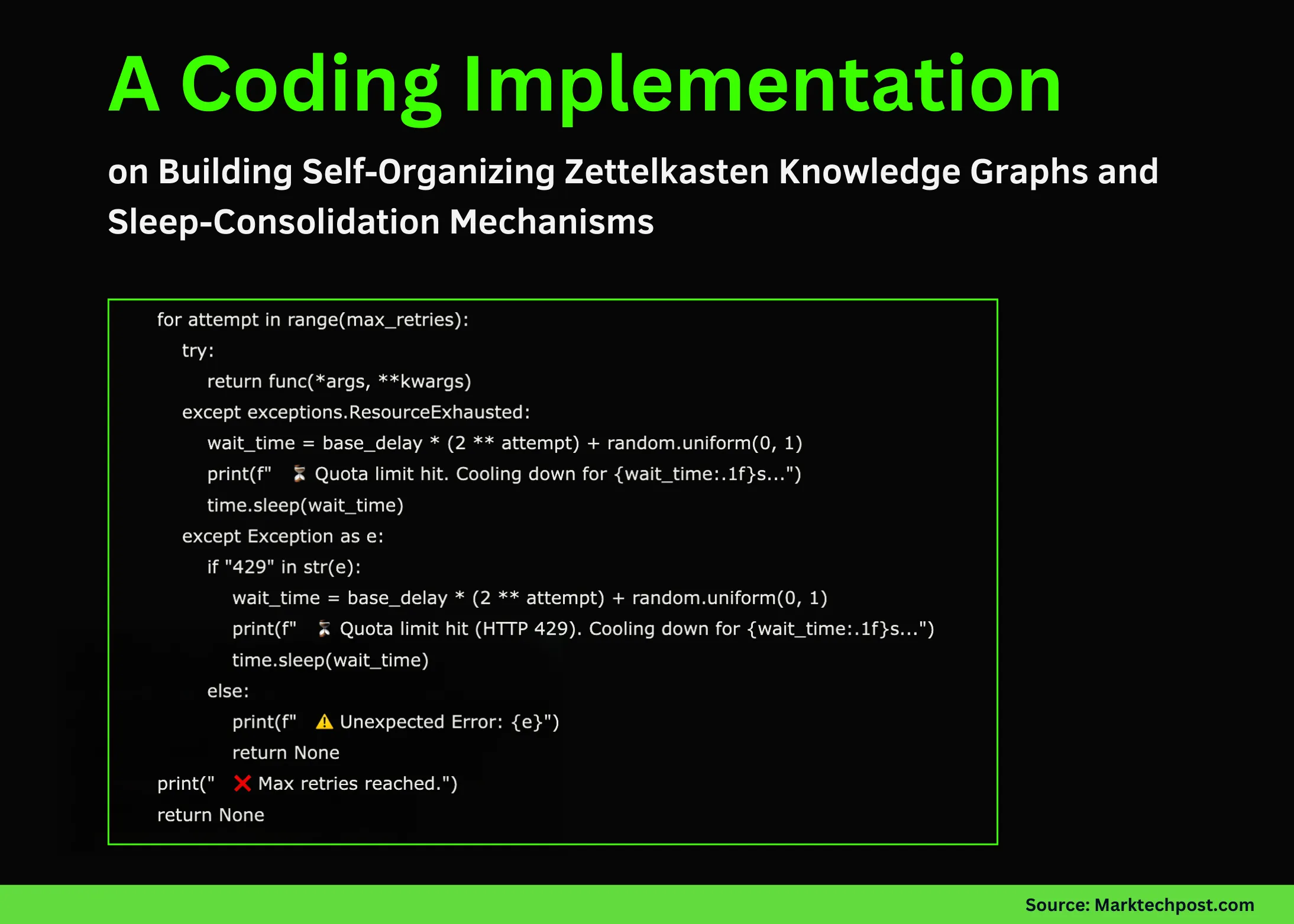

```python
def retry_with_backoff(func, *args, **kwargs):
    max_retries = 5
    base_delay = 5

    for attempt in range(max_retries):
        try:
            return func(*args, **kwargs)
        except exceptions.ResourceExhausted:
            # Exponential backoff with up to 1s of random jitter
            wait_time = base_delay * (2 ** attempt) + random.uniform(0, 1)
            print(f"   ⏳ Quota limit hit. Cooling down for {wait_time:.1f}s…")
            time.sleep(wait_time)
        except Exception as e:
            if "429" in str(e):
                wait_time = base_delay * (2 ** attempt) + random.uniform(0, 1)
                print(f"   ⏳ Quota limit hit (HTTP 429). Cooling down for {wait_time:.1f}s…")
                time.sleep(wait_time)
            else:
                print(f"   ⚠️ Unexpected Error: {e}")
                return None

    print("   ❌ Max retries reached.")
    return None


print("Enter your Google AI Studio API Key (Input will be hidden):")
API_KEY = getpass.getpass()

genai.configure(api_key=API_KEY)
MODEL_NAME = "gemini-2.5-flash"
EMBEDDING_MODEL = "models/text-embedding-004"
print(f"✅ API Key configured. Using model: {MODEL_NAME}")
```

We begin by importing the libraries we need for graph management and model interaction, and we read the API key with getpass so that it is not echoed to the screen. Crucially, we define a retry_with_backoff function that handles rate-limit errors: when the API quota is exceeded during heavy processing, the agent waits with exponentially growing delays and retries up to five times before giving up and returning None.
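To make the waiting behavior concrete, here is a minimal sketch that computes the delays the formula `base_delay * (2 ** attempt) + random.uniform(0, 1)` would produce, without calling any API. The helper name `backoff_schedule` and its `jitter` and `rng` parameters are illustrative assumptions and are not part of the tutorial code.

```python
import random


def backoff_schedule(max_retries=5, base_delay=5, jitter=1.0, rng=None):
    """Return the wait time in seconds before each retry.

    Hypothetical helper: it mirrors the tutorial's formula
    base_delay * 2**attempt + uniform(0, jitter) but only computes
    the delays instead of sleeping.
    """
    if rng is None:
        rng = random.Random()
    return [
        base_delay * (2 ** attempt) + rng.uniform(0, jitter)
        for attempt in range(max_retries)
    ]


# With jitter disabled the schedule is deterministic.
delays = backoff_schedule(jitter=0.0)
print(delays)       # [5.0, 10.0, 20.0, 40.0, 80.0]
print(sum(delays))  # 155.0
```

With the default settings, a call that keeps hitting the quota therefore blocks for roughly two and a half minutes in total before the function gives up. The random jitter spreads out retries so that several clients do not all retry at the same instant.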

```python
@dataclass
class MemoryNode:
    id: str
    content: str
    type: str
    embedding: List[float] = field(default_factory=list)
    timestamp: int = 0


class RobustZettelkasten:
    def __init__(self):
        self.graph = nx.Graph()
        self.model = genai.GenerativeModel(MODEL_NAME)
        self.step_counter = 0

    def _get_embedding(self, text):
        result = retry_with_backoff(
            genai.embed_content,
            model=EMBEDDING_MODEL,
            content=text
        )
        # Fall back to a 768-dimensional zero vector if the call failed
        return result['embedding'] if result else [0.0] * 768
```

We define the MemoryNode data class to hold each note's content, type, vector embedding, and timestamp. We then initialize the main RobustZettelkasten class, which owns the network graph and the Gemini generative model, and add a _get_embedding helper that calls the Gemini embedding model. These embeddings are the backbone of our semantic search; if the embedding call fails, the helper falls back to a 768-dimensional zero vector.
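The `embedding` field uses `field(default_factory=list)` rather than a plain `[]` default. The short sketch below, which assumes `MemoryNode` is declared with the `@dataclass` decorator (the `field(...)` default only works that way), shows the effect: every node gets its own list, so writing to one node's embedding never leaks into another. The sample node contents are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MemoryNode:
    id: str
    content: str
    type: str
    embedding: List[float] = field(default_factory=list)
    timestamp: int = 0


# Two nodes created without an explicit embedding (illustrative data).
a = MemoryNode(id="a1", content="Backend is Python", type="fact")
b = MemoryNode(id="b2", content="Frontend is Svelte", type="fact")

# Each instance received a fresh list from default_factory.
a.embedding.append(0.5)
print(a.embedding, b.embedding)  # [0.5] []
print(a.timestamp)               # 0
```

The decorator also generates `__init__`, `__repr__`, and `__eq__`, which is why the tutorial can construct nodes with keyword arguments without writing a constructor by hand.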

```python
    # Methods below continue the RobustZettelkasten class.

    def _atomize_input(self, text):
        prompt = f"""
        Break the following text into independent atomic facts.
        Output JSON: {{ "facts": ["fact1", "fact2"] }}
        Text: "{text}"
        """
        response = retry_with_backoff(
            self.model.generate_content,
            prompt,
            generation_config={"response_mime_type": "application/json"}
        )
        try:
            return json.loads(response.text).get("facts", []) if response else [text]
        except Exception:
            # Malformed JSON: keep the input as a single fact
            return [text]

    def _find_similar_nodes(self, embedding, top_k=3, threshold=0.45):
        if not self.graph.nodes: return []

        nodes = list(self.graph.nodes(data=True))
        embeddings = [n[1]['data'].embedding for n in nodes]
        valid_embeddings = [e for e in embeddings if len(e) > 0]

        if not valid_embeddings: return []

        sims = cosine_similarity([embedding], embeddings)[0]
        sorted_indices = np.argsort(sims)[::-1]

        results = []
        for idx in sorted_indices[:top_k]:
            if sims[idx] > threshold:
                results.append((nodes[idx][0], sims[idx]))
        return results

    def add_memory(self, user_input):
        self.step_counter += 1
        print(f"\n🧠 [Step {self.step_counter}] Processing: \"{user_input}\"")

        facts = self._atomize_input(user_input)

        for fact in facts:
            print(f"   -> Atom: {fact}")
            emb = self._get_embedding(fact)
            # Search before inserting so the new node cannot match itself
            candidates = self._find_similar_nodes(emb)

            node_id = str(uuid.uuid4())[:6]
            node = MemoryNode(id=node_id, content=fact, type="fact", embedding=emb, timestamp=self.step_counter)
            self.graph.add_node(node_id, data=node, title=fact, label=fact[:15]+"...")

            if candidates:
                context_str = "\n".join([f"ID {c[0]}: {self.graph.nodes[c[0]]['data'].content}" for c in candidates])
                prompt = f"""
                I am adding: "{fact}"
                Existing Memory:
                {context_str}

                Are any of these directly related? If yes, provide the relationship label.
                JSON: {{ "links": [{{ "target_id": "ID", "rel": "label" }}] }}
                """
                response = retry_with_backoff(
                    self.model.generate_content,
                    prompt,
                    generation_config={"response_mime_type": "application/json"}
                )

                if response:
                    try:
                        links = json.loads(response.text).get("links", [])
                        for link in links:
                            # Ignore IDs the model made up
                            if self.graph.has_node(link['target_id']):
                                self.graph.add_edge(node_id, link['target_id'], label=link['rel'])
                                print(f"      🔗 Linked to {link['target_id']} ({link['rel']})")
                    except Exception:
                        pass

            time.sleep(1)
```

We construct an ingestion pipeline that decomposes each user input into atomic facts, so that every fact can be stored and linked on its own. We embed each fact, retrieve the most similar existing nodes (the top 3 with cosine similarity above 0.45), and ask the model whether any of them are directly related and how. Only links that point to node IDs already in the graph are added, so the knowledge graph grows in real time in a way that mimics associative memory.
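The candidate search is the step that decides which existing notes the model even gets to see. The sketch below reproduces the same top-k-plus-threshold selection in plain Python on toy 3-dimensional vectors, so it can be run without scikit-learn or an API key. The function names `cosine` and `find_similar`, the `store` dictionary, and the vectors themselves are illustrative assumptions; the tutorial code uses `cosine_similarity` from scikit-learn on 768-dimensional Gemini embeddings.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_similar(query, store, top_k=3, threshold=0.45):
    """Return up to top_k (node_id, score) pairs whose score exceeds threshold."""
    scored = [(node_id, cosine(query, vec)) for node_id, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [(node_id, score) for node_id, score in scored[:top_k] if score > threshold]


# Toy "embeddings"; the all-zero vector stands in for a failed embedding call.
store = {
    "react": [1.0, 0.0, 0.0],
    "svelte": [0.8, 0.6, 0.0],
    "solar": [0.0, 0.0, 1.0],
    "failed": [0.0, 0.0, 0.0],
}

matches = find_similar([1.0, 0.0, 0.0], store)
print([node_id for node_id, _ in matches])  # ['react', 'svelte']
```

Note that a zero-vector fallback embedding scores 0.0 against everything, so a node whose embedding call failed is never proposed as a link candidate under this threshold.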

```python
    # Methods below continue the RobustZettelkasten class.

    def consolidate_memory(self):
        print(f"\n💤 [Consolidation Phase] Reflecting...")
        # Hubs: nodes linked to at least two other nodes
        high_degree_nodes = [n for n, d in self.graph.degree() if d >= 2]
        processed_clusters = set()

        for main_node in high_degree_nodes:
            neighbors = list(self.graph.neighbors(main_node))
            cluster_ids = tuple(sorted([main_node] + neighbors))

            # Skip clusters that were already summarized
            if cluster_ids in processed_clusters: continue
            processed_clusters.add(cluster_ids)

            cluster_content = [self.graph.nodes[n]['data'].content for n in cluster_ids]

            prompt = f"""
            Generate a single high-level insight summary from these facts.
            Facts: {json.dumps(cluster_content)}
            JSON: {{ "insight": "Your insight here" }}
            """
            response = retry_with_backoff(
                self.model.generate_content,
                prompt,
                generation_config={"response_mime_type": "application/json"}
            )

            if response:
                try:
                    insight_text = json.loads(response.text).get("insight")
                    if insight_text:
                        insight_id = f"INSIGHT-{uuid.uuid4().hex[:4]}"
                        print(f"   ✨ Insight: {insight_text}")
                        emb = self._get_embedding(insight_text)

                        insight_node = MemoryNode(id=insight_id, content=insight_text, type="insight", embedding=emb)
                        self.graph.add_node(insight_id, data=insight_node, title=f"INSIGHT: {insight_text}", label="INSIGHT", color="#ff7f7f")
                        self.graph.add_edge(insight_id, main_node, label="abstracted_from")
                except Exception:
                    continue
            time.sleep(1)

    def answer_query(self, query):
        print(f"\n🔍 Querying: \"{query}\"")
        emb = self._get_embedding(query)
        candidates = self._find_similar_nodes(emb, top_k=2)

        if not candidates:
            print("No relevant memory found.")
            return

        relevant_context = set()
        for node_id, score in candidates:
            node_content = self.graph.nodes[node_id]['data'].content
            relevant_context.add(f"- {node_content} (Direct Match)")
            # Expand each match to its direct neighbors
            for n1 in self.graph.neighbors(node_id):
                rel = self.graph[node_id][n1].get('label', 'related')
                content = self.graph.nodes[n1]['data'].content
                relevant_context.add(f"  - linked via '{rel}' to: {content}")

        context_text = "\n".join(relevant_context)
        prompt = f"""
        Answer based ONLY on context.
        Question: {query}
        Context:
        {context_text}
        """
        response = retry_with_backoff(self.model.generate_content, prompt)
        if response:
            print(f"🤖 Agent Answer:\n{response.text}")
```

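We implement the cognitive functions of our agent. During the “sleep” phase, it looks for hub nodes with at least two links, gathers each hub and its neighbors into a cluster, and asks the model to condense that cluster into a single higher-order insight, which is stored as a new node linked back to the hub. We also define the query logic: it retrieves the two closest nodes and expands each one to its directly linked neighbors, so the agent answers from connected context rather than from isolated facts.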
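The cluster selection in the consolidation phase can be tried out without a model call. The following sketch applies the same rule (a hub has degree of at least 2, a cluster is the sorted tuple of the hub plus its neighbors, and identical clusters are processed once) to a plain adjacency dictionary instead of a NetworkX graph. The function name `find_clusters` and the toy graph are illustrative assumptions.

```python
def find_clusters(adjacency, min_degree=2):
    """Return (hub, cluster) pairs, skipping clusters that were already seen.

    adjacency maps each node ID to the set of its neighbors.
    """
    clusters = []
    seen = set()
    for node, neighbors in adjacency.items():
        if len(neighbors) < min_degree:
            continue  # not a hub
        cluster = tuple(sorted({node, *neighbors}))
        if cluster in seen:
            continue  # same member set was already summarized
        seen.add(cluster)
        clusters.append((node, cluster))
    return clusters


# Toy graph loosely modeled on the project scenario (made-up IDs).
adjacency = {
    "apollo": {"react", "python"},
    "react": {"apollo", "client", "svelte"},
    "python": {"apollo"},
    "client": {"react"},
    "svelte": {"react"},
}

for hub, cluster in find_clusters(adjacency):
    print(hub, "->", cluster)
# apollo -> ('apollo', 'python', 'react')
# react -> ('apollo', 'client', 'react', 'svelte')
```

The deduplication matters for tightly connected groups: in a triangle of three mutually linked nodes, every node is a hub with the same member set, so only one insight is generated instead of three.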

```python
    # Method below continues the RobustZettelkasten class.

    def show_graph(self):
        try:
            net = Network(notebook=True, cdn_resources="remote", height="500px", width="100%", bgcolor="#222222", font_color="white")
            for n, data in self.graph.nodes(data=True):
                # Blue for facts, red for insights
                color = "#97c2fc" if data['data'].type == 'fact' else "#ff7f7f"
                net.add_node(n, label=data.get('label', ''), title=data['data'].content, color=color)

            for u, v, data in self.graph.edges(data=True):
                net.add_edge(u, v, label=data.get('label', ''))

            net.show("memory_graph.html")
            display(HTML("memory_graph.html"))
        except Exception as e:
            print(f"Graph visualization error: {e}")


brain = RobustZettelkasten()

events = [
    "The project 'Apollo' aims to build a dashboard for tracking solar panel efficiency.",
    "We chose React for the frontend because the team knows it well.",
    "The backend must be Python to support the data science libraries.",
    "Client called. They are unhappy with React performance on low-end devices.",
    "We are switching the frontend to Svelte for better performance."
]

print("--- PHASE 1: INGESTION ---")
for event in events:
    brain.add_memory(event)
    time.sleep(2)

print("--- PHASE 2: CONSOLIDATION ---")
brain.consolidate_memory()

print("--- PHASE 3: RETRIEVAL ---")
brain.answer_query("What is the current frontend technology for Apollo and why?")

print("--- PHASE 4: VISUALIZATION ---")
brain.show_graph()
```

We wrap up by adding a visualization method that renders the agent’s memory as an interactive HTML graph, with fact nodes in blue and insight nodes in red, so that we can inspect the nodes and edges. Finally, we run a test scenario built from a short project timeline to check that the system links related concepts, generates insights, and retrieves the right context for a question about the current frontend technology.

In conclusion, we now have a working “Living Memory” prototype that goes beyond simple database storage. By enabling our agent to link related concepts and to reflect on its experiences during a “consolidation” phase, we address the problem of fragmented context in long-running AI interactions. The system illustrates that a capable agent needs not only processing power but also a structured, evolving memory, which paves the way for more capable, personalized autonomous agents.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.